Cargo Cult Learning Algorithms in Chinese Rooms

Posted: 2019-01-27. Updated: 2019-02-03.

In this post, we'll learn how to win at chess, deliver goods to the Pacific, speak a foreign language, and do machine learning in a world where correlation equals causation. On the side, I hope to provoke some thought about what neural networks might be achieving under the hood, how we could tell, and whether it matters.

Let's start with a thought experiment.

1. The Prodigy

Say you meet a promising young chess player. You ask why she made a certain move and she says "I don't know, I just saw some grandmaster games where they made moves like that and figured it might work for me too." You ask about other moves, but the answers are all the same. Okay, fair enough. You shrug and go back to your day job.\footnote{In this story, your day job is operating construction equipment for a contractor in South America, but it turns out not to be relevant.}

A few years later, that player is now best in the world. Her play shows deep calculation and brilliant positional sacrifices. And she can't be imitating other grandmasters anymore, because she's better than all of them. So you ask again: how does she pick her moves?

She says, "Since I'm the best, I just practice against myself. And I watch what moves normally work out well for me, and during the world championship, I try to make moves that look like those moves. You know, just sort of guess what I would normally play and then play that."

You smile, nod, go home, and sell your chess set on craigslist.\footnote{You actually take up flamenco guitar for a while before getting into tai chi.}

2. How to Code a Cult

Of course, I'm stereotyping the way that modern neural-network-based machine learning algorithms tend to learn, in particular self-play chess engines AlphaZero\footnote{https://en.wikipedia.org/wiki/AlphaZero} and the open-source Leela Chess Zero\footnote{https://en.wikipedia.org/wiki/Leela_Chess_Zero} (but keep other ML problems such as image recognition in mind). Like the player, these algorithms view many thousands of games (or labeled images) and, rather than explicitly trying to build understanding, seem to magically achieve it by learning to mimic success. So let's analyze the thought experiment, then come back to ML.

Here's the first point. Feynman coined the term cargo cult science in his 1974 Caltech commencement address:\footnote{https://en.wikipedia.org/wiki/Cargo_cult_science. Read the commencement address here: http://calteches.library.caltech.edu/51/2/CargoCult.htm}

"In the South Seas there is a Cargo Cult of people. During the war they saw airplanes land with lots of good materials, and they want the same thing to happen now. So they’ve arranged to make things like runways, to put fires along the sides of the runways, to make a wooden hut for a man to sit in, with two wooden pieces on his head like headphones and bars of bamboo sticking out like antennas—he’s the controller—and they wait for the airplanes to land. They’re doing everything right. The form is perfect. It looks exactly the way it looked before. But it doesn’t work. No airplanes land. So I call these things Cargo Cult Science, because they follow all the apparent precepts and forms of scientific investigation, but they’re missing something essential, because the planes don’t land."

In my story, the prodigy doesn't attempt to understand why some moves lead to wins and some lead to losses. She just tries to mimic good players closely\footnote{Including haircut and clothing. I left that out of the story.} in the hopes that it will work for her too.

So let's define: A cargo cult learning algorithm is one that uses purely correlation-based inference to drive causal decisionmaking. It takes in data of the form "when A happens, B tends to happen" and naively uses it to make decisions of the form "guess that A causes B", or "choose A in the hopes of causing B".\footnote{Let me emphasize I do not want to talk about whether current machine learning research is full of cargo culting. (Well, I do, but I won't.) I want to talk about the behavior of learning algorithms themselves, especially overparameterized universal function approximators.}

I'll get back to "naively" at the end. But the weird thing is, even if she's running a cargo cult algorithm, it works. The planes land. Even weirder, when she's the best in the world, she keeps improving by cargo-culting her own past successes, so to speak. How?

3. Revisiting the Chinese Room

The second point of the experiment is that, regardless of whether or not the prodigy "understands" the game of chess, or why certain moves are good, she plays like she does.

This is reminiscent of the Chinese room thought experiment\footnote{https://en.wikipedia.org/wiki/Chinese_room}. A room holds a perfect lookup table for responding to any Chinese query in Chinese. Inside, a non-Chinese-speaker such as myself receives queries from the outside, and responds by looking up the answers. From the outside, the Chinese room seems to be holding an intelligent conversation even though the person inside (me) has no idea what is going on.\footnote{As usual!}

The Chinese room experiment is supposed to provoke some deep discussion about the nature of consciousness.\footnote{If you believe in consciousness. (Ooooh.)} For our purposes, the point is that if you can only test the inputs and the outputs, then it seems like you might as well believe that the person in the room understands Chinese. Similarly, we might as well say the prodigy "understands" chess, in fact, better than anyone else.

4. The Emperor Needs No Clothes

So here's my point: the prodigy is running a cargo cult algorithm from inside a Chinese room. From the inside, she's just imitating moves good players make, with (in some sense) no idea why they work. From the outside, she is apparently the most knowledgeable player of all time.

How is this possible? Well, imagine that, in chess, there was no practical difference between moves that are correlated with winning in a position, and moves that cause one to win.\footnote{To me, this seems like it might be true about chess, but I'm a patzer.} It would be like a world where, as long as the runway was realistic enough and the headphone angle just right, the plane would come land after all.

Let's give this phenomenon a name, after Arthur C. Clarke's famous adage.\footnote{You can find his three laws here but in the context of algorithms, maybe we can tack the fourth law onto these three too.}

(The fourth law) Sufficiently accurate correlation is indistinguishable from causation.

For example, if A and B always co-occur, then we might as well predict that A causes B; it doesn't matter if it's true or not for accuracy purposes.

Let's say that a problem like chess is a fourth-law domain if, for practical purposes, the fourth law applies there. In a fourth-law domain, it would be possible to have no idea what makes planes come and go, yet be able to predict their landing perfectly. The point of my thought experiment is that, in a fourth-law domain, a cargo cult algorithm works perfectly. Mimicking actions correlated with success leads to success. Our chess prodigy would be considered the most knowledgeable player of all time; from outside a Chinese room, who could object? The difference would be (and I apologize to the philosophers) purely philosophical.

5. Inception

The next part works best if you read it asleep:

(in Leo's voice) What if modern neural networks are mostly running cargo cult algorithms? And what if we mostly tested them in fourth-law domains? Would that performance be "real"? Would we be able to tell if it weren't? Is the distinction even meaningful?

These are not rhetorical questions. I'm seriously asking. The recent success of machine learning is undeniable. AlphaZero is great at chess, neural networks are great at image labeling. But the implications are still not clear.

In fourth-law domains, cargo-cult algorithms would look like Chinese rooms to us. The questions go in, the right answers come out. We might as well assume the thing in the room has a deep understanding of the problem domain. We might as well hope this understanding can generalize to simple perturbations of the problem or counterfactuals.\footnote{If you're in academia, then the difference between benchmark and real-world performance is purely academic.} We might as well write lots of articles about how AI is ready to eat the world.

6. The Emperor Feels Cold

Maybe we could tell.

If you follow machine learning, you've seen this one before. With "adversarial examples", image-recognition algorithms can be easily convinced to make all kinds of hilarious mistakes, such as mistaking a cat for guacamole\footnote{See this nice article: https://www.theregister.co.uk/2017/11/06/mit_fooling_ai/}, a toy turtle for a rifle\footnote{https://www.youtube.com/watch?v=YXy6oX1iNoA}, or a stop sign for a speed limit\footnote{https://arxiv.org/abs/1707.08945}.

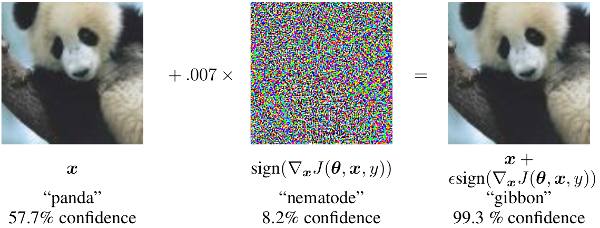

After adding imperceptible noise, the algorithm incorrectly guesses "gibbon" with high confidence. From Goodfellow, Shlens, and Szegedy 2014, https://arxiv.org/abs/1412.6572

With that in mind, let's go back to cargo cults. Imagine you took people from a cargo cult and showed them photos of a bunch of different places around the world, and asked them which ones the planes would land in. They would do great. You could create an MNIST or ImageNet\footnote{Two popular image-recognition benchmark datasets. http://yann.lecun.com/exdb/mnist/, http://www.image-net.org/} of airports and non-airports and those folks would score 99.7% "Air Traffic Controller Level" performance. But they're wildly mistaken about what airplanes and aiports actually are.

That's a cargo cult algorithm. That's using purely correlational data to derive conclusions about how the world works. And as long as you only test it in fourth-law domains, all the right answers come out. But then you ask a question that illuminates those conclusions, and the room starts speaking nonsense.

For example, it would be like someone observing photos of pandas and gibbons and concluding that any photo with certain low-pixel-value patterns will turn out to be a gibbon. They keep thinking this even if everything important about the photo is changed to a panda. They're totally correct about past correlations, but incredibly wrong about what matters in determining the right label. That's a cargo cult algorithm.

Or it would be like someone who doesn't understand Chinese generating novel grammatical Chinese sentences\footnote{For some example work using neural nets to do this, see e.g. https://arxiv.org/abs/1705.10929.} that are relevant to what you're asking, but completely failing to make a coherent point or stay on topic. Or a phone that uses high-frequency cues to decode speech, so that if you take out the speech and just make inaudible squeaks, it still thinks you're ordering pizza.\footnote{See the "Dolphin attack" paper https://arxiv.org/abs/1708.09537.} That (no matter how accurate) is a cargo cult algorithm.

7. Long Live the Emperor

Given the amazing performance of modern ML algorithms in domains like generative examples\footnote{Check this out: http://www.codingwoman.com/generative-adversarial-networks-entertaining-intro/}, it seems clear they "learn" a lot about their problem domains. But this is a paradox, because they also clearly have no flippin' clue what a panda actually is.\footnote{It's sort of the opposite of what sketches and cartoonists can do, which as Granny Aching says in Pratchett's "A Hat Full of Sky", "Tain't what a horse look like, but it's what a horse be."} (Or a cat, or a stop sign, or...)

Here is a possible resolution of the paradox: neural networks are substantially cargo cult algorithms, and so far, they work great, but only in fourth-law domains. Could that be true? What would the implications be? Is it meaningful, falsifiable? These are the questions I want to leave you with.

Maybe there are some cases (perhaps chess) that truly are pure fourth-law domains. Maybe some problems (perhaps voice and image recognition) can vary sharply depending on whether you just want to identify landing sites, or land the planes yourself.

Regardless, it raises the question of how to rigorously test "understanding" of a problem domain. Humans are easily fooled by visually impressive demonstrations (realistic-seeming conversations, etc). We tend to believe that understanding correlation implies understanding causation. So we must continue exploring how to rigorously evaluate the limitations of our algorithms. (For example, I think the fairness, transparency, and interpretability concerns\footnote{See e.g. https://fatconference.org/} of many researchers is related to the problems that could arise when applying cargo-cult algorithms to more complex real-world settings.)

Of course, these philosophical questions will probably not stop corporations from deploying these classifiers on 2-ton machines down the streets your children play on, in the hopes that sufficiently advanced correlation can substitute for causation. They may be right, I don't know. I just want to understand machine learning.

8. Postsript: Caveats and Directions

These concerns only apply to a small subset of algorithms (but ones that have gotten the most attention recently). Much of ML attempts to explicitly capture structure or e.g. causal relationships. This is why my definition of cargo cult algorithms includes the "naive" qualifier -- we have studied how to deduce structure from purely observational data for a long time in stats, ML, and e.g. econometrics.\footnote{For example you can watch Athey's recent tutorial.}

I've also ignored bandit and reinforcement learning algorithms that seem to explicitly learn causations (e.g. they conduct randomized experiments). But I wonder if even many of these (especially utilizing deep networks) mostly exploit the fourth law.

I've often used the word understanding without being able to technically define it or even know it when I see it. How could an algorithm convince you it understands pandas? Let's call that a challenge for the reader. I've also confused the difference between structure of a learning problem and causal relationships (but it's confusing: doesn't the animal "cause" the pixels?). For the purposes of everything I've said, both "structure" and "causal relationships" work as counterpoints to memorizing correlations.

If modern learning algorithms do have these drawbacks, this is only a good sign for research opportunities and breakthroughs on the horizon. Hopefully future research will answer these and all of humanity's questions. In any case, I hope this article helped in computing your own personal gradient, and good luck with your next steps! \footnote{Admit it, that one was pretty good.}

Thanks to Akshay Krishnamurthy for feedback on an earlier version!